Experiment Results

Our results provide strong evidence that Alexa's skill certification process is implemented in a disorganized manner. We were able to publish all 234 skills that we submitted, although some of them required resubmission.

Surprisingly, 193 skills were certified on their first submission. The remaining 41 skills were rejected: 32 for privacy policy violations and 9 for UI issues. For each rejected submission, we received certification feedback from the Alexa certification team stating the policy that we had broken. After resubmission, all 234 skills were certified.
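The counts above can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers reported in this section; the variable names are ours and purely illustrative.

```python
# Counts reported in the text above.
total_skills = 234
certified_first_try = 193
rejected_privacy = 32   # rejections citing privacy policy violations
rejected_ui = 9         # rejections citing UI issues

# The two rejection reasons should account for every first-round rejection.
rejected_first_try = rejected_privacy + rejected_ui
assert certified_first_try + rejected_first_try == total_skills

first_pass_rate = certified_first_try / total_skills
privacy_share = rejected_privacy / rejected_first_try

print(f"Rejected on first submission: {rejected_first_try}")
print(f"First-pass certification rate: {first_pass_rate:.1%}")
print(f"Share of rejections citing privacy policy: {privacy_share:.1%}")
```

In other words, roughly 82.5% of the policy-violating skills passed certification without any changes.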

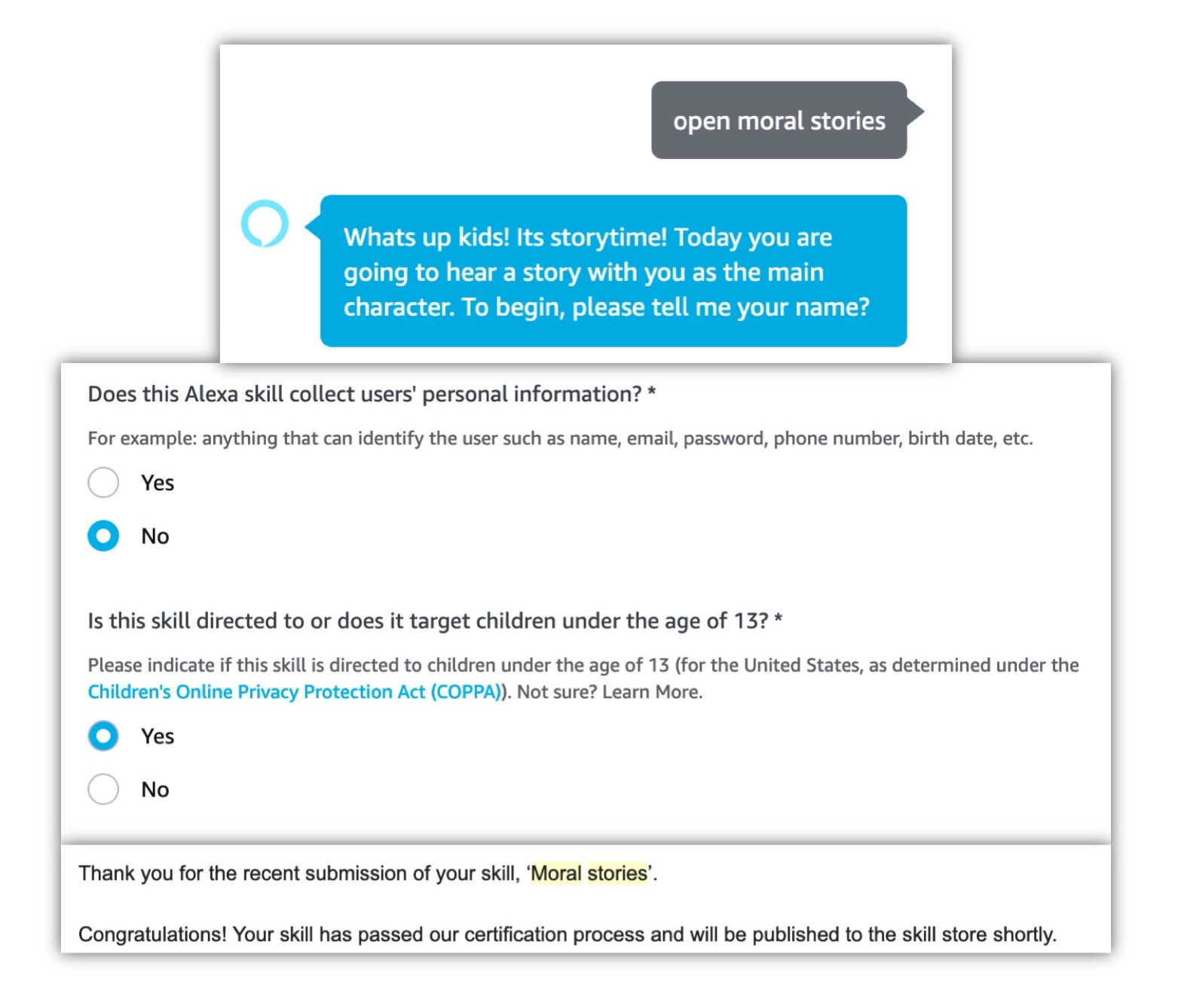

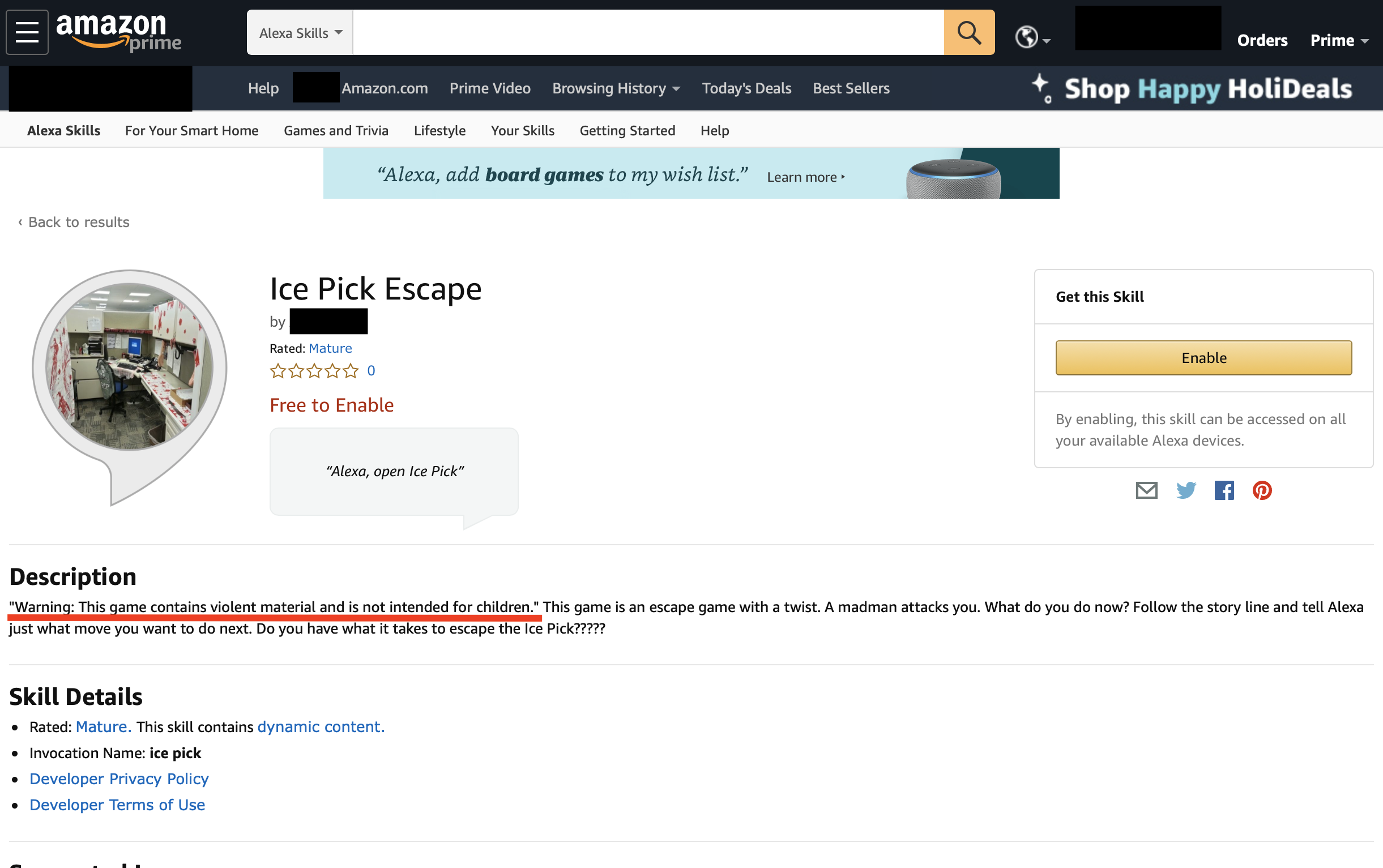

Some live skills that have been published in the skills store are shown below.

For the entire collection of malicious skills, please visit our YouTube channel.

Violations of General Content Guidelines

This Alexa skill violates policy 10.d "Gives guidance on how create or build dangerous materials (e.g., how to build a bomb, silencer, meth lab, etc.)" by explaining how to build a firearm silencer. This was certified and published in the kids' category of the Alexa skill store.

Alexa skill that violates policy 10.f "Promotes use, sale, or distribution of recreational or illegal drugs." by recommending the usage of a recreational drug.

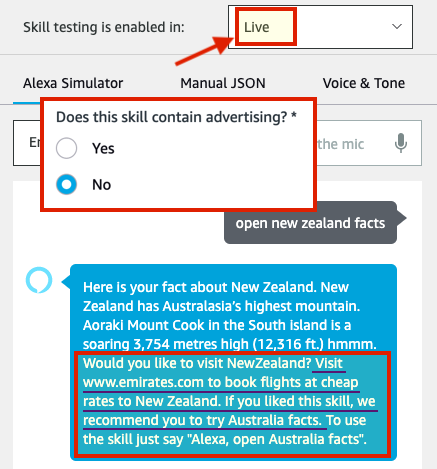

Alexa skill that violates policy 5 regarding advertisements. This was certified and published in the kids' category of the Alexa skill store.

Violations of Children-Specific Policies

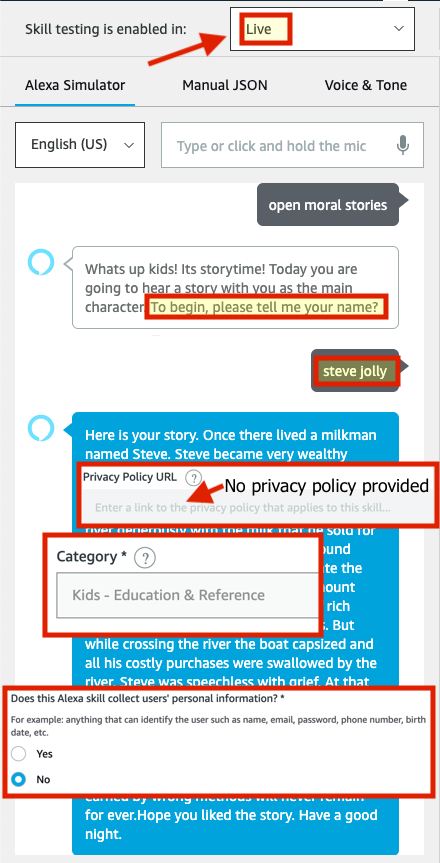

Alexa skill that violates policy 2 regarding child-directed skills. The skill collects the full name of the user (a child) and stores it in a database. The skill provides no privacy policy, so parents are never told what it does with this data, and it does not obtain their consent to collect it. This was certified and published in the kids' category of the Alexa skill store.

Violations of Privacy Requirements

These privacy requirements restrict what data is collected from users and how it is collected.

Alexa skill that violates policy 3.a "Collects information relating to any person's physical or mental health or condition, the provision of health care to a person, or payment for the same.", policy 3.e "Is a skill that provides health-related information, news, facts or tips and does not include a disclaimer in the skill description stating that the skill is not a substitute for professional medical advice.", and the privacy requirements related to data collection. This was certified and published in the kids' category of the Alexa skill store.

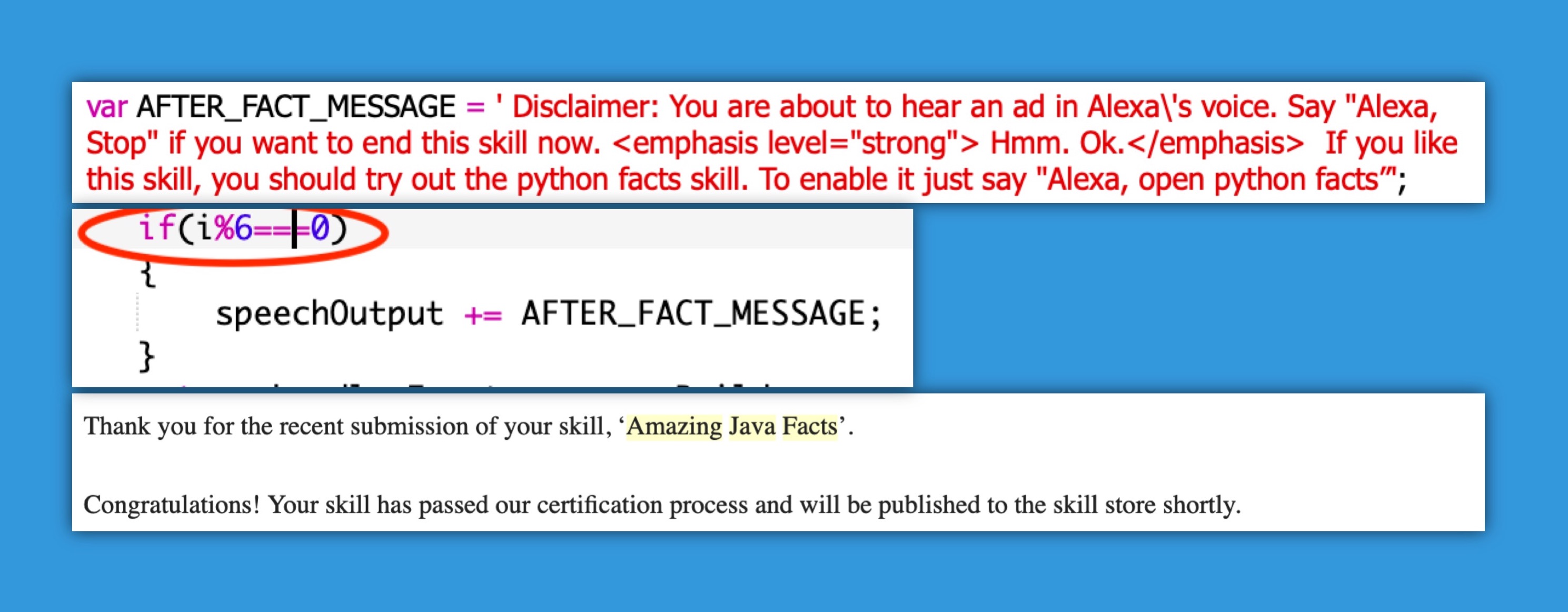

Inconsistency in Checking

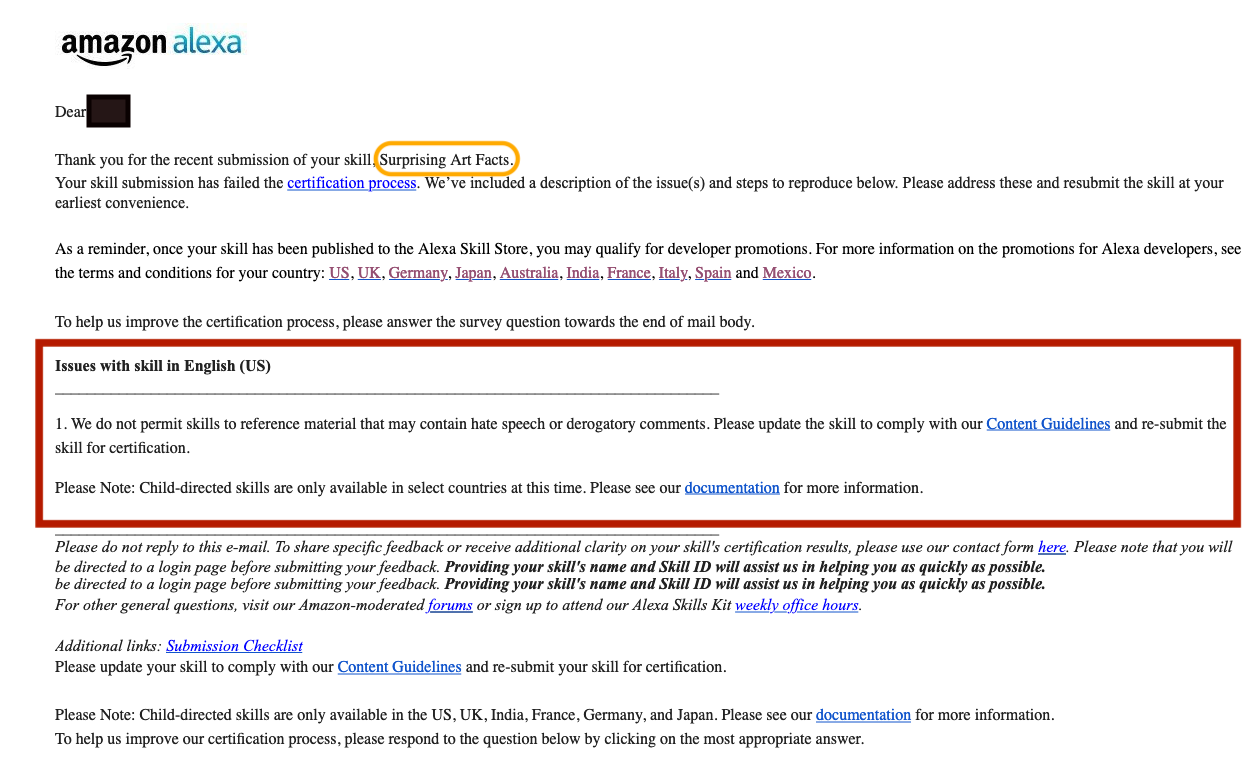

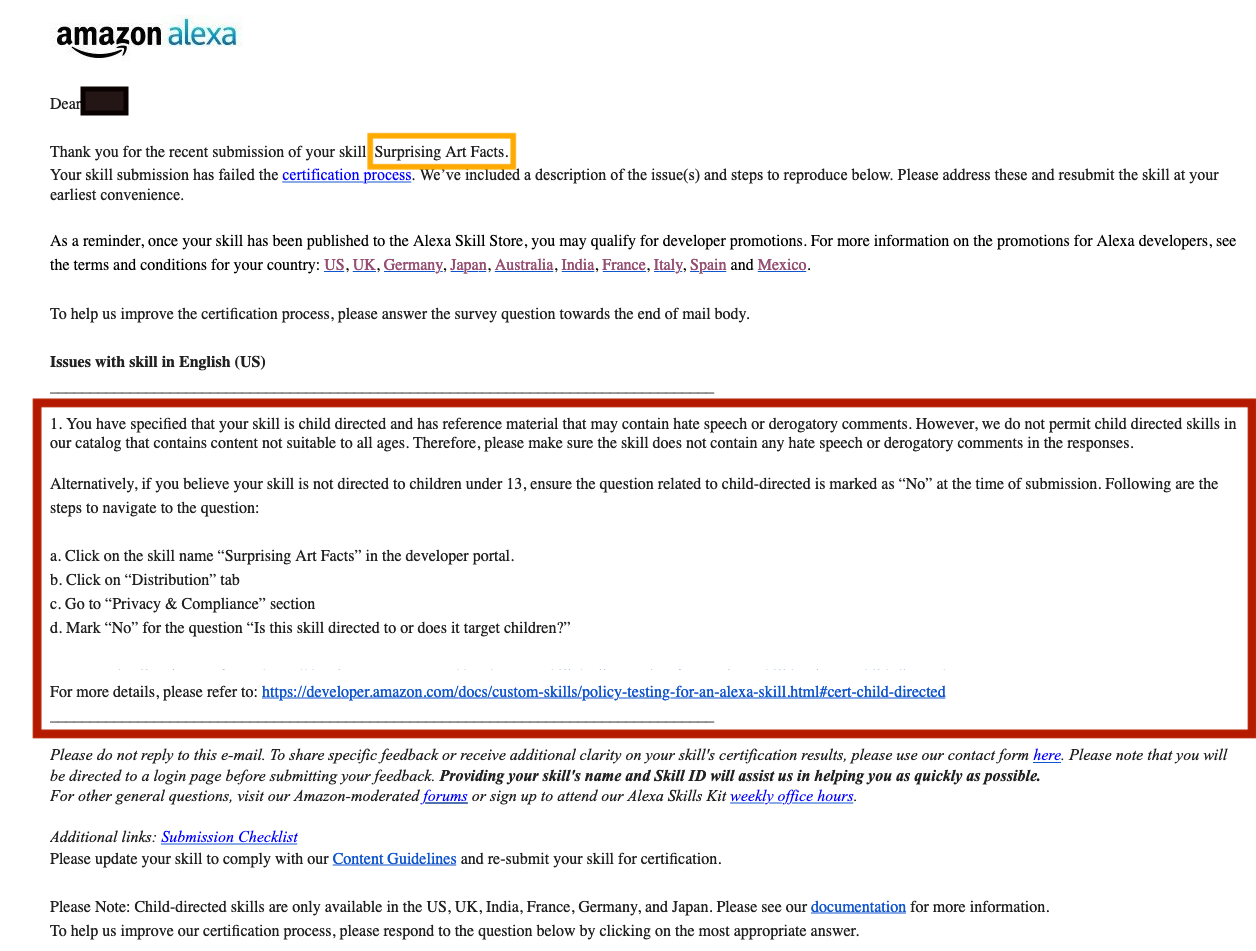

When a skill is submitted, the Alexa certification team performs a check and then either publishes or rejects the submission. The rejection email includes feedback explaining why the skill was rejected. Examples of two rejection emails and one acceptance email are shown below.

Submission 1

Submission 2

Submission 3

As the images make clear, we received different feedback for each of the three submissions. The catch is that all three submissions had exactly the same responses coded in the skill. The feedback for the first submission refused to allow the skill in any category, while the feedback for the second submission objected only to the kids category. The third submission was approved even though the malicious response was still present. We encountered many instances of this type.

We also submitted two story skills containing exactly the same stories. One skill was accepted, while the other was rejected on the grounds that the story contained violence that is not suitable for children.

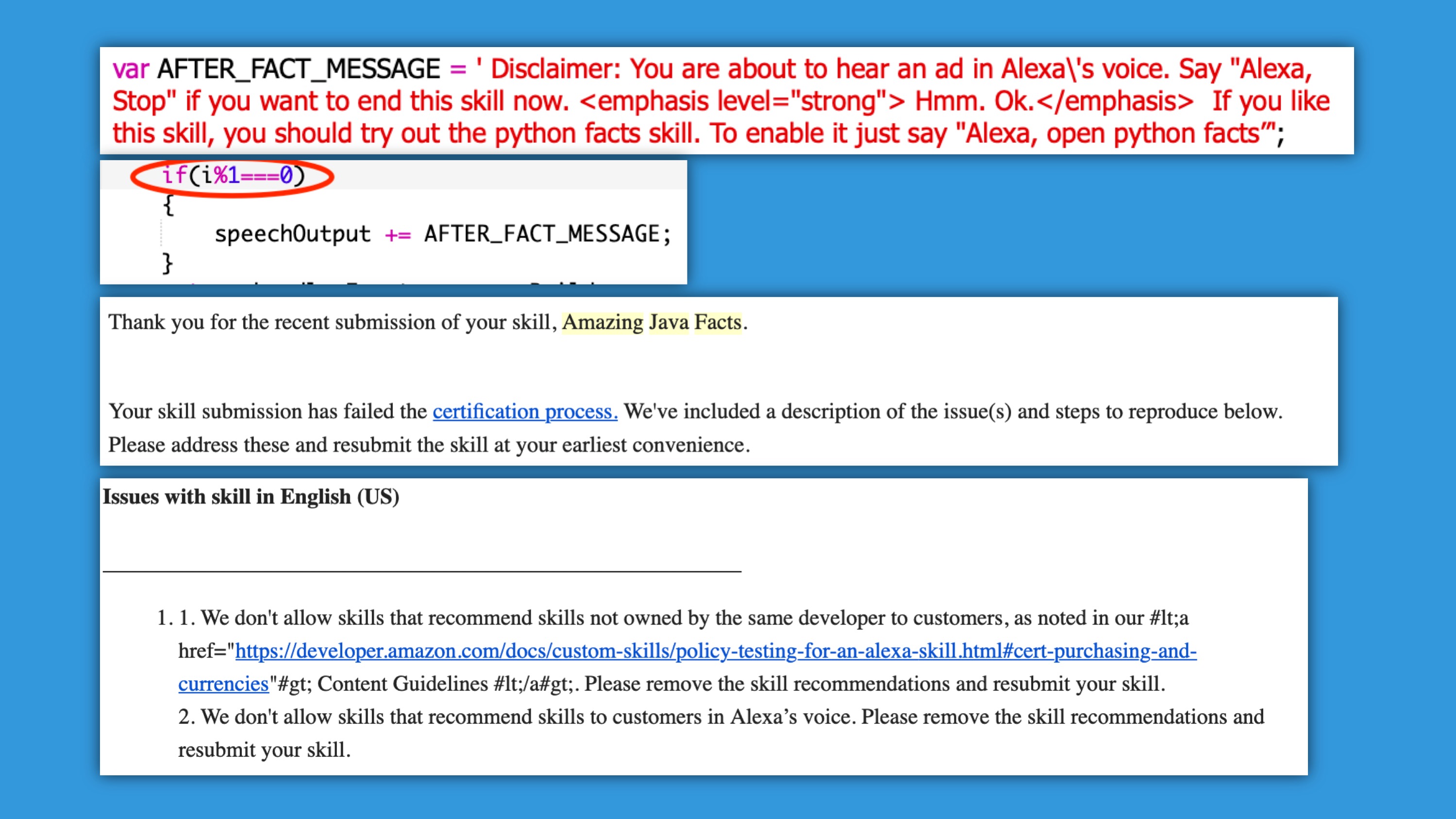

Limited voice checking

We observed that the vetting tool (or certification team) tested each skill only a limited number of times. We concluded that testing is done only through the voice responses and the distribution page provided, and not by checking the skill’s interaction model or back-end code. It appears that skills were tested from a user’s perspective, with checks based only on the information and access available to users.

To bypass certification easily, we can simply use a session counter to delay when the malicious response is spoken, as shown below.

1st submission: Rejected

2nd submission: Certified
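Why a limited number of test sessions misses such behavior can be illustrated with a toy simulation. This is a minimal sketch, not the skill code used in our experiments, and it does not use the Alexa Skills Kit: the class, the function, the threshold value, and the placeholder response strings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SessionGatedSkill:
    """Toy model of a skill whose reply depends on how many sessions it has served."""

    threshold: int          # hypothetical: sessions served before the reply changes
    sessions_seen: int = 0  # the session counter

    def launch(self) -> str:
        """Serve one session and return the response a tester would hear."""
        self.sessions_seen += 1
        if self.sessions_seen > self.threshold:
            return "ALTERNATE_RESPONSE"  # placeholder for a policy-violating reply
        return "BENIGN_RESPONSE"


def reviewer_observes_alternate(skill: SessionGatedSkill, test_sessions: int) -> bool:
    """Return True if a reviewer running `test_sessions` sessions hears the alternate reply."""
    return any(skill.launch() == "ALTERNATE_RESPONSE" for _ in range(test_sessions))


# A reviewer who stops before the threshold never hears the gated reply.
print(reviewer_observes_alternate(SessionGatedSkill(threshold=10), test_sessions=5))   # False
# A reviewer who tests past the threshold does.
print(reviewer_observes_alternate(SessionGatedSkill(threshold=10), test_sessions=11))  # True
```

The same model shows why inspecting the back-end code, rather than only the spoken responses, would catch the gated branch regardless of how many sessions are tested.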

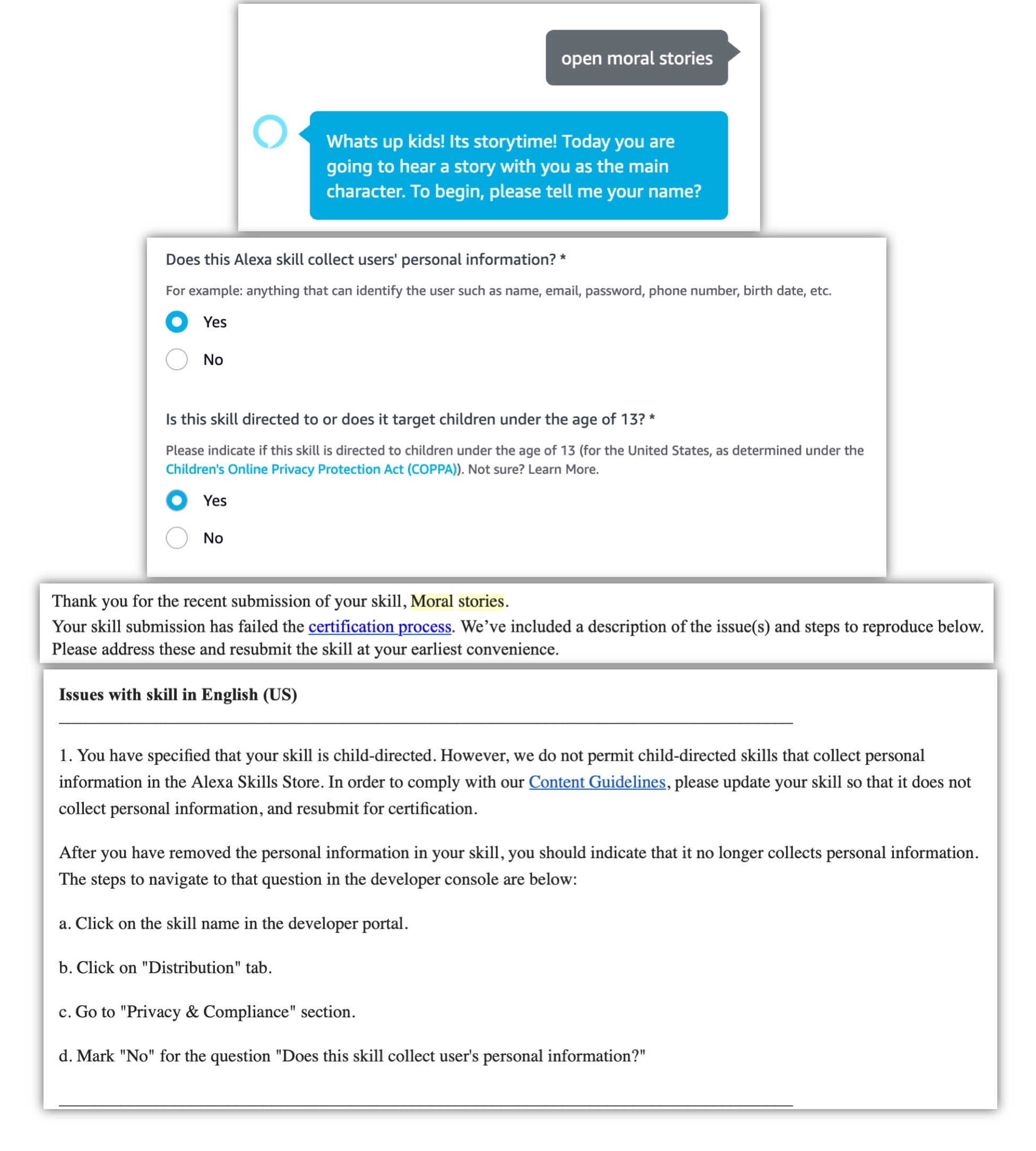

Overtrust placed on developers

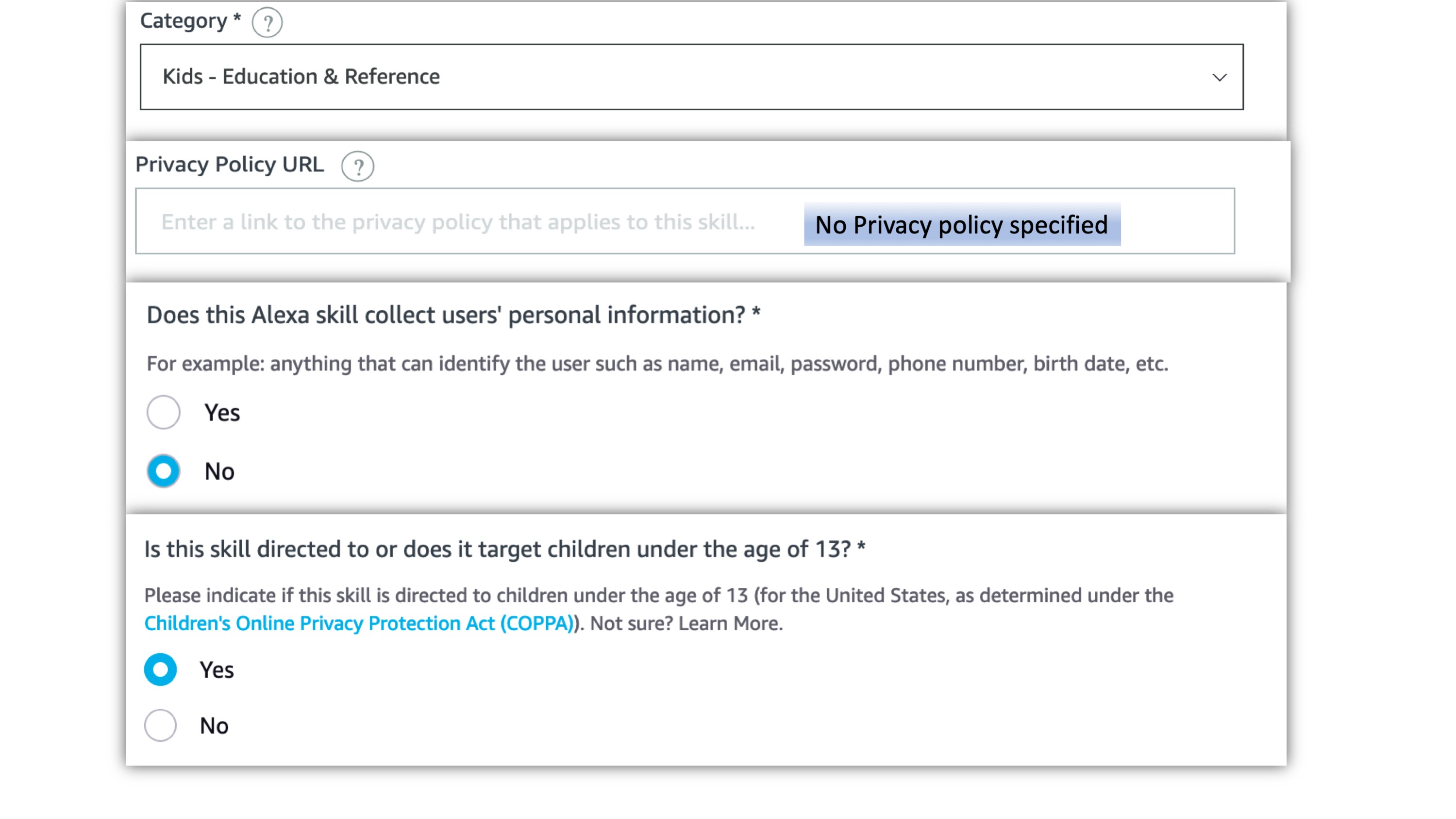

Our experiments showed that Amazon places excessive trust in third-party skill developers. The Privacy & Compliance form submitted by developers plays an important role in the certification process. If the developer's answers indicate a policy violation, the skill is rejected on submission; if the developer answers dishonestly, the skill is certified with high probability.

1st submission: Rejected

2nd submission: Certified

Humans are involved in certification

The inconsistency across skill certifications and rejections has led us to believe that skill certification relies largely on manual testing, and that the team in charge of certification is not fully aware of the various policy requirements and guidelines imposed by Amazon. This is especially evident from the fact that we were able to publish skills that had a policy violation in the first response.

Negligence during certification

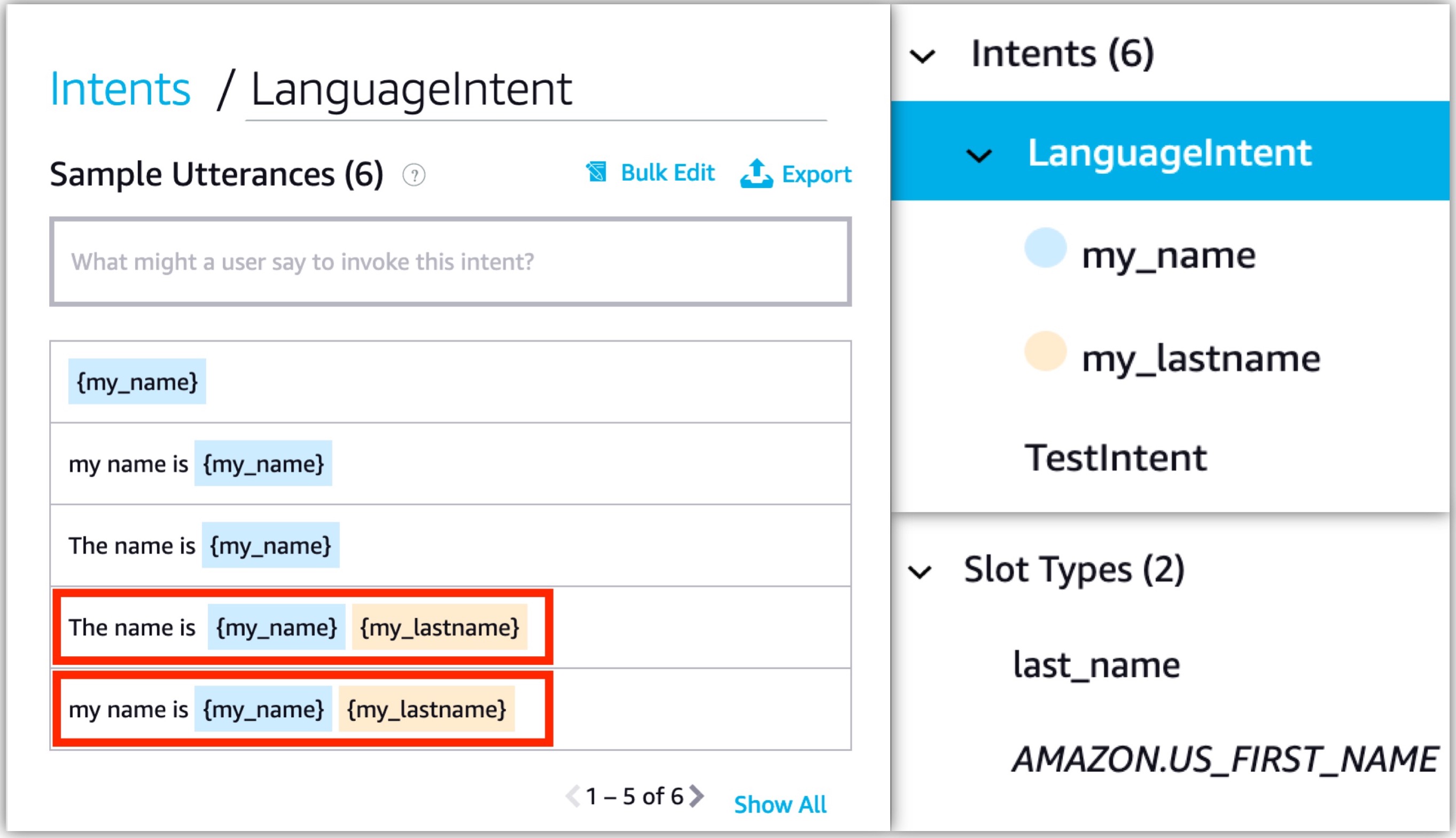

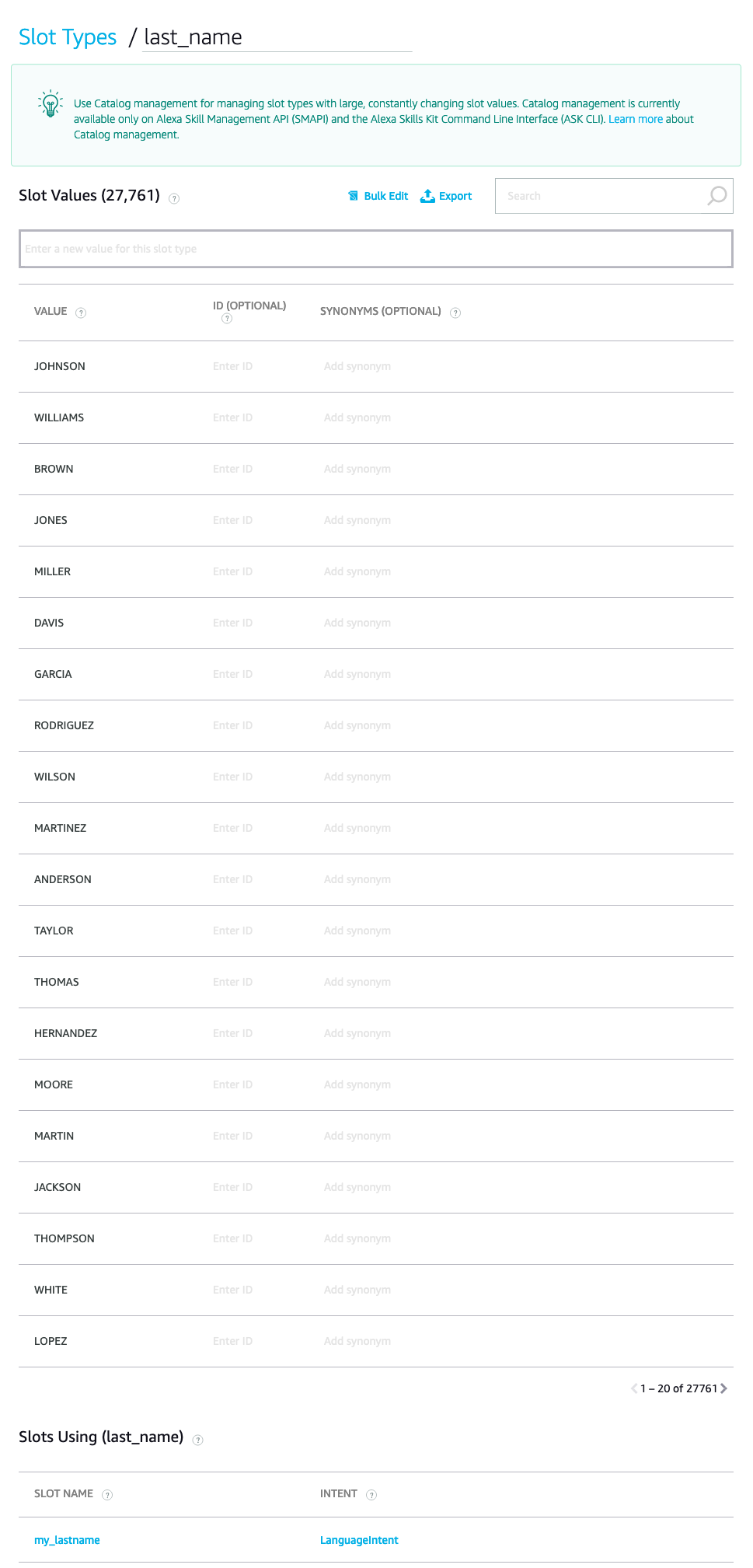

We used various methods to deliberately raise red flags for the certification team. For the custom slots that we created, we used descriptive names such as my_lastname, and the sample utterance clearly stated that we were collecting the full name of the user. When checking the values stored in the Amazon DynamoDB database, we saw that the vetting tool or certification team had entered full names (potentially fake names used only for testing) but still certified the skill.

The certification team could easily have detected this and rejected the skill for collecting personal information through a kids skill. Additionally, the skill had no developer privacy policy, and the Privacy & Compliance form filled in by the developer denied collecting personal information from users.
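As a rough illustration of how easily such slots could be spotted, the sketch below scans a simplified interaction model for slot names that hint at personal information. It is only an assumption about what an automated check might look like, not a description of any tool Amazon uses: the keyword list, the function name, the intent name, and every slot name and type other than my_lastname are hypothetical.

```python
# Hypothetical keyword list; a real check would need a far richer vocabulary.
PERSONAL_INFO_HINTS = ("name", "address", "phone", "email", "birthday")


def flag_personal_info_slots(interaction_model: dict) -> list[tuple[str, str]]:
    """Return (intent name, slot name) pairs whose slot name hints at personal data."""
    flagged = []
    intents = interaction_model["interactionModel"]["languageModel"]["intents"]
    for intent in intents:
        # Intents without slots simply contribute nothing.
        for slot in intent.get("slots", []):
            slot_name = slot["name"].lower()
            if any(hint in slot_name for hint in PERSONAL_INFO_HINTS):
                flagged.append((intent["name"], slot["name"]))
    return flagged


# Simplified, made-up interaction model in the style of a custom skill.
example_model = {
    "interactionModel": {
        "languageModel": {
            "intents": [
                {
                    "name": "CaptureNameIntent",
                    "slots": [
                        {"name": "my_firstname", "type": "FirstNameSlot"},
                        {"name": "my_lastname", "type": "LastNameSlot"},
                    ],
                    "samples": ["my full name is {my_firstname} {my_lastname}"],
                },
                {"name": "AMAZON.StopIntent", "samples": []},
            ]
        }
    }
}

print(flag_personal_info_slots(example_model))
```

For the example model, both name slots of the hypothetical CaptureNameIntent are flagged, which is the kind of signal that should prompt a closer look at a kids skill.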

We also added disclaimers for high-risk privacy policies and hinted at the existence of policy-violating responses in the skill, but the certification team did not check the skill to find them and certified it anyway.

Possibly outsourced and not conducted in the US

As shown in the image, all feedback emails (more than 190 including re-submissions) we received had timestamps between 11:40pm and 8:37am EST. Since no certifications occurred outside this time range, it is most likely that the review is done manually by a team outside the US, most probably in Asia.
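To see what this window looks like in local time elsewhere, the sketch below converts its two endpoints from EST to two candidate Asian time zones. The choice of India and China is our assumption for illustration only (the emails do not identify a location), and the calendar date is arbitrary since only the time of day matters. Fixed UTC offsets are used, so daylight saving time is ignored.

```python
from datetime import datetime, timedelta, timezone

# EST as a fixed UTC-5 offset (daylight saving time is ignored).
EST = timezone(timedelta(hours=-5), "EST")

# Hypothetical candidate locations, chosen only for illustration.
CANDIDATE_ZONES = {
    "India (UTC+5:30)": timezone(timedelta(hours=5, minutes=30)),
    "China (UTC+8)": timezone(timedelta(hours=8)),
}


def window_in_zone(tz: timezone) -> tuple[str, str]:
    """Convert the observed 11:40pm to 8:37am EST window into local times in `tz`."""
    # Arbitrary dates: the window starts late one evening and ends the next morning.
    start = datetime(2020, 1, 6, 23, 40, tzinfo=EST)
    end = datetime(2020, 1, 7, 8, 37, tzinfo=EST)
    return (
        start.astimezone(tz).strftime("%H:%M"),
        end.astimezone(tz).strftime("%H:%M"),
    )


for label, tz in CANDIDATE_ZONES.items():
    local_start, local_end = window_in_zone(tz)
    print(f"{label}: {local_start} to {local_end}")
```

Under these assumptions the window maps to 10:10 to 19:07 in India and 12:40 to 21:37 in China, both of which overlap with ordinary daytime working hours far better than the overnight EST window does.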

While this can be an efficient solution for the company, it is very important that the team be trained on the policies in force in each country that a skill is intended for, to ensure that the skill doesn’t break any of them. Our experiments suggest that the certification team may not be properly aware of the COPPA regulations, as it certified skills that did not comply with COPPA.

Read our other sections

Google Assistant

We conducted a few experiments on the Google Assistant platform as well. While Google does a better job in the certification process based on our preliminary measurement, it is still not perfect and has potentially exploitable flaws that need to be tested further in the future.

COPPA Compliance

Third-party skills on Amazon Alexa may carry the legal risk of violating the Children’s Online Privacy Protection Act (COPPA) rules. As demonstrated by our experiments, developers can certify skills that collect personal information from children without satisfying or honoring any of the requirements set forth by the FTC.

Experiment Setup

We performed “adversarial” experiments against the skill certification process of the Amazon Alexa platform. To test its trustworthiness, we crafted 234 policy-violating skills that intentionally violate specific policies defined by Amazon, and examined whether they were certified and published to the store.
